We stand at a curious junction in medical history.

More than 230 million people ask ChatGPT health-related questions every week. They’re searching for answers about symptoms, treatment options, and what their lab results might mean. This represents roughly one in four of ChatGPT’s regular users turning to an AI system for medical guidance.

That behavior says something important about the current state of healthcare information.

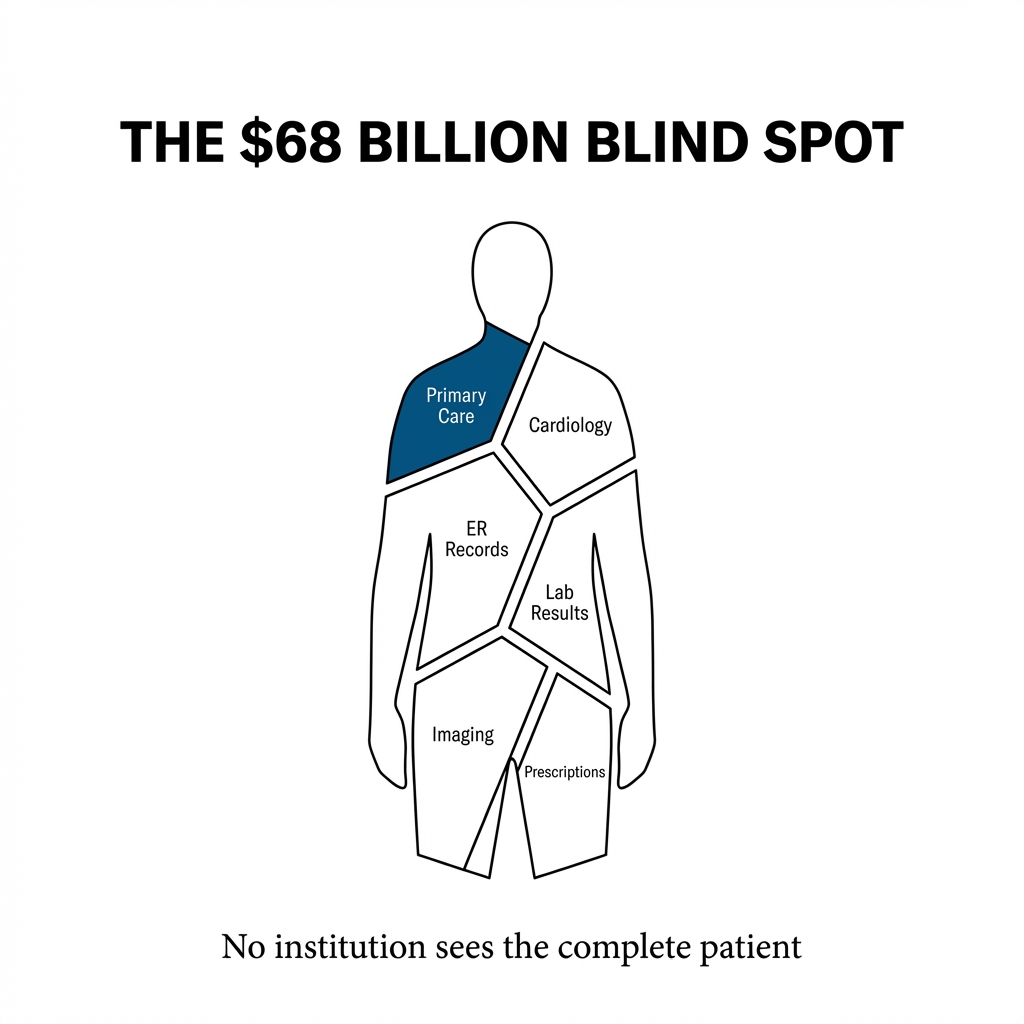

People don’t trust the system to give them complete answers because the system itself doesn’t have complete answers. In practice, no single U.S. medical institution holds a complete picture of any given patient. Your primary care physician doesn’t know what your cardiologist prescribed. Your emergency room doctor can’t see your imaging from last month. Your specialist works with fragments of your medical story.

Healthcare fraud, which thrives when no one can see the whole record, costs an estimated $68 billion annually on its own.

The number doesn’t account for the duplicated tests, the contradictory prescriptions, or the diagnostic delays that occur when information lives in isolated silos across thousands of healthcare organizations.

The Architecture of Disconnection

Healthcare data is perpetually scattered.

More than 2,000 government health data sets are maintained at the federal, state, and local levels. Each hospital system maintains its own IT platform. Each clinic follows different standards. Each insurance company builds its own walls. The privacy controls that protect patient information also prevent the kind of comprehensive view that modern medicine requires.

We’ve built a system where protection and fragmentation have become the same thing.

The consequences show up in physician workload. Reporting quality measures alone costs U.S. physician practices an estimated $15 billion a year and consumes about 785 hours per physician annually. About 45% of all malpractice claims relate to misdiagnoses. Another 10% stem from medication-related problems. These aren’t failures of medical knowledge. They’re failures of information systems.

When your doctor can’t see what another doctor prescribed, the system has failed before treatment even begins.

The Patient as Integration Point

OpenAI’s ChatGPT Health, launched in January 2026, represents a different approach to the fragmentation problem.

The platform doesn’t try to force hospitals to share data or standardize systems. Instead, it positions the patient as the natural integration point for their own medical information. Users can connect medical records through b.well, link data from Apple Health, and pull in information from wellness apps like MyFitnessPal, Peloton, and AllTrails.

The patient becomes the hub.

This architectural choice matters because it sidesteps the institutional gridlock that has prevented data integration for decades. You don’t need hospitals to agree on standards when you give individuals the tools to aggregate their own information.

The development process involved collaboration with more than 260 physicians from 60 countries over two years. It focused on safety, clarity, and appropriate escalation of care rather than on diagnostic accuracy alone.

This distinction between accuracy and judgment reveals a deeper understanding of what healthcare AI actually needs to do.

The Judgment Question

Microsoft’s AI Diagnostic Orchestrator correctly diagnosed up to 85% of complex New England Journal of Medicine case records, outperforming experienced physicians, who averaged 20% accuracy on the same cases.

But a broader systematic review tells a more nuanced story. It put the overall diagnostic accuracy of generative AI models at a moderate 52%. The models performed significantly worse than expert physicians but comparably to non-expert physicians.

The variation matters.

Diagnostic accuracy in controlled case studies doesn’t translate directly to real-world clinical judgment. Knowing when to escalate care, when to wait, when to dig deeper into a patient’s history—these decisions require contextual understanding that extends beyond pattern recognition.

ChatGPT Health explicitly avoids positioning itself as a diagnostic tool. The platform supports users in preparing for appointments, understanding results, and making informed decisions. It doesn’t replace the clinical relationship.

This boundary setting isn’t just legal protection. It reflects a recognition that healthcare AI serves best as an amplifier of human judgment rather than a replacement for it.

The Privacy Calculation

ChatGPT Health operates outside HIPAA protections.

The platform provides technology services that fall outside HIPAA’s scope, which creates a fundamental trust challenge. Users must decide whether the benefits of integrated health information outweigh the risks of sharing that information with a commercial AI system.

OpenAI addresses this through purpose-built encryption and isolation mechanisms. Health data lives in a separate environment from general ChatGPT interactions. The architecture treats medical information as categorically different from other user data.

But architecture alone doesn’t resolve the trust question.

The platform remains unavailable to users in the European Economic Area, Switzerland, and the United Kingdom. Regulatory frameworks in these regions create barriers that OpenAI hasn’t yet navigated. Meanwhile, 19 U.S. states have enacted comprehensive privacy laws, with a slow but growing trend toward health data-specific legislation.

We’re watching regulation fragment in the same way as the data it’s meant to protect.

The geographic patchwork of AI healthcare services reveals how different societies balance innovation against protection. European regulators prioritize patient privacy and institutional oversight. U.S. regulators allow more experimentation while states develop their own approaches.

Neither framework has solved the underlying tension between data access and data protection.

The Competitive Landscape Taking Shape

ChatGPT Health’s launch signals the beginning of a market consolidation around healthcare data infrastructure.

Companies that establish themselves as trusted aggregators of patient information gain a structural advantage. They become the platform through which other services must operate. The network effects compound quickly when users have already connected their medical records, wellness apps, and wearable devices to a single system.

This dynamic creates winner-take-most conditions in healthcare AI.

The patient empowerment narrative serves as a strategic entry point. By positioning the service as a tool for individuals to understand their own health better, OpenAI builds a user base and demonstrates value before expanding into more sensitive areas.

The progression from personal health assistant to clinical decision support to diagnostic tool follows a predictable path. Each stage builds legitimacy for the next.

But the progression also concentrates power in ways that deserve scrutiny.

The Infrastructure Layer

Healthcare AI infrastructure differs from consumer AI in fundamental ways.

The data is more sensitive. The stakes are higher. The regulatory environment is more complex. The institutional relationships matter more. Building trust with patients, physicians, and healthcare systems requires different capabilities than building a general-purpose chatbot.

OpenAI’s collaboration with hundreds of physicians represents an investment in institutional legitimacy. The focus on safety and escalation protocols addresses medical community concerns. The privacy architecture responds to regulatory requirements.

These aren’t just product features. They’re moats.

Companies entering the healthcare AI space later will need to replicate not just the technology but the relationships, the safety protocols, and the institutional trust that early movers establish.

What the User Behavior Reveals

The 230 million weekly users asking health questions on ChatGPT existed before ChatGPT Health launched.

They were already turning to AI for medical information despite the platform not being designed for healthcare. They were already sharing symptoms, medications, and test results with a general-purpose chatbot.

This behavior tells us something important about the current healthcare system.

People need better access to information about their own health. They need help interpreting results. They need support preparing for medical appointments. They need someone—or something—that can look at their complete picture and help them make sense of it.

The medical establishment hasn’t provided these tools at the scale people need them.

The demand for integrated health information has been visible for years. ChatGPT Health represents a response to user behavior that was already happening, not the creation of new demand.

This distinction matters because it shifts the conversation from “Should people use AI for health information?” to “How do we make the AI people are already using safer and more effective?”

The Path Forward

Healthcare data fragmentation won’t disappear because a new platform launches.

The institutional barriers, the regulatory complexities, and the technical challenges that created the current landscape remain. But the patient-as-hub model offers a practical path around some of these obstacles.

We’re watching the emergence of a new layer in healthcare infrastructure. One where individuals control their own data aggregation. Where AI systems help people navigate the complexity of modern medicine. Where the integration happens at the patient level rather than the institutional level.

The model has limitations. Not everyone has the health literacy to interpret AI-generated insights. Not everyone has the digital access to use these platforms. Not everyone wants to take on the cognitive load of managing their own health data.

But for the millions already seeking health information from AI systems, ChatGPT Health represents a step toward making that behavior safer and more useful.

The real test will come in how these platforms handle the inevitable mistakes, the edge cases, and the moments when AI confidence exceeds AI competence. The architecture of trust matters more than the architecture of algorithms.

We’re building systems that will shape how people understand their own bodies and make decisions about their care. The responsibility extends beyond code and data structures into the realm of human wellbeing.

The healthcare AI landscape is taking form around us. The companies that understand this aren’t just building better chatbots. They’re building the infrastructure through which future medicine will flow.

The fragmentation problem remains vast. But the direction of travel has become clearer.
